Kernun UTM's HTTP proxy provides several methods for changing the request URIs passed to servers and for making decisions based on URIs. A URI can be matched against regular expressions, looked up in a local database (blacklist), or evaluated by the Kernun Clear Web DataBase or by an external Web filter. The sample configuration /usr/local/kernun/conf/samples/cml/url-filter.cml contains an HTTP proxy with all URI filtering methods.

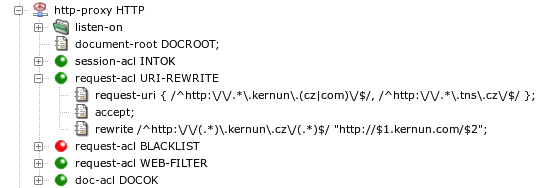

An example of an HTTP proxy that matches HTTP requests by the request URI is depicted in Figure 5.71, “Request URI matching and rewriting”. If a request URI matches one of the regular expressions in the string specified in the request-uri item of request-acl URI-REWRITE, this ACL will be selected. It accepts the request and applies the rewrite item(s). There can be several rewrite items. Each of them contains a regular expression and a string. A request URI that matches the regular expression in a rewrite item will be rewritten to the string. Dollar-digit pairs ($1, $2…) in the string will be replaced by the parts of the original URI matched by the parenthesized parts of the regular expression. The rewrite item in Figure 5.71, “Request URI matching and rewriting” matches any URI containing kernun.cz and rewrites cz to com, leaving the rest of the URI unchanged.
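The substitution of $1, $2… can be illustrated with a minimal Python sketch. It is not the proxy's implementation: the pattern and the replacement string below are assumptions chosen to reproduce the kernun.cz to kernun.com example (the actual values are in the figure), and Python's regular expression dialect may differ from the one used by the proxy.

```python
import re

def rewrite_uri(uri, pattern, template):
    """Return the rewritten URI, or the original URI if the pattern does not match."""
    match = re.search(pattern, uri)
    if match is None:
        return uri

    def expand(ref):
        # $1, $2, ... stand for the parenthesized groups of the pattern.
        index = int(ref.group(1))
        return match.group(index) or ""

    # The whole URI is replaced by the template with the $N pairs expanded.
    return re.sub(r"\$(\d)", expand, template)

# Hypothetical pattern and replacement string, chosen for illustration only.
PATTERN = r"^(.*kernun\.)cz(.*)$"
TEMPLATE = "$1com$2"

print(rewrite_uri("http://www.kernun.cz/", PATTERN, TEMPLATE))
# prints http://www.kernun.com/
```

A URI that does not match the pattern is returned unchanged, which corresponds to a rewrite item that simply does not apply.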

In a request-acl, there are two more types of URI-matching conditions. We have already seen request-uri, which matches the whole URI. The other conditions are request-scheme (matches the scheme part of the URI) and request-path (matches the path part of the URI).
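To make the terms concrete, the following Python sketch splits a URI into its scheme and path using the standard library. The example URI is hypothetical, and how exactly the proxy delimits the path (for instance, whether a query string is included) is not stated here, so the sketch only shows the generic URI decomposition.

```python
from urllib.parse import urlsplit

def uri_parts(uri):
    """Return the scheme and path components of a URI."""
    parts = urlsplit(uri)
    return {"scheme": parts.scheme, "path": parts.path}

# Hypothetical request URI used for illustration.
print(uri_parts("http://www.kernun.cz/doc/index.html"))
```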

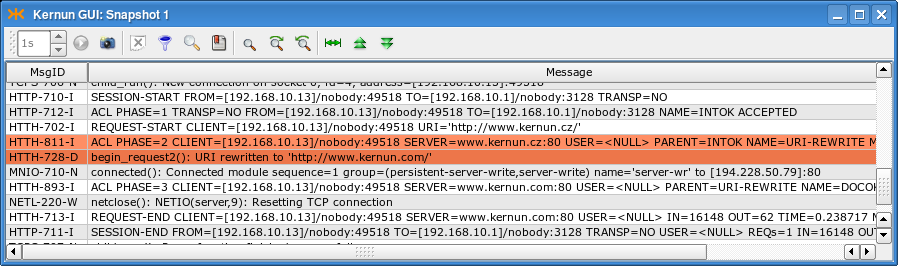

Figure 5.72, “Log of URI matching and rewriting” shows a log of an HTTP proxy running with the configuration from Figure 5.71, “Request URI matching and rewriting” and processing a request with URI http://www.kernun.cz/. The two most important messages are marked. The HTTH-811-I message reports that request-acl URI-REWRITE was selected. The following message confirms that the URI was rewritten to http://www.kernun.com/.

A security policy may require denying access to large groups of Web servers. Adding a very large number of servers to a request-acl.request-uri item may slow down ACL processing significantly. A better strategy is to create a blacklist of forbidden servers in a special format that the HTTP proxy processes more efficiently. A blacklist must be prepared in a text file in the format described in mkblacklist(1). The textual blacklist is then converted to a binary form usable by the HTTP proxy by the utilities resolveblacklist(1) and mkblacklist(1). A binary blacklist file can be converted back to the text format by printblacklist(1).

A blacklist contains a list of Web server addresses (domain names or IP addresses), each with an optional initial part of a request path. A list of categories is assigned to each address. A request-acl can be selected by matching its blacklist item against the set of categories that the blacklist assigns to the request URI.

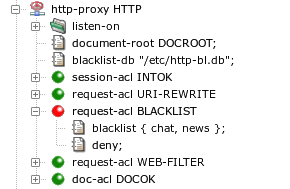

An HTTP proxy will use the blacklist database file specified by blacklist-db in the http-proxy section, see Figure 5.73, “A blacklist in the HTTP proxy”. If a request URI belongs to the category chat or news, then request-acl BLACKLIST will be selected and the request will be denied. Other requests will be processed according to request-acl WEB-FILTER, described in the next section.
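The selection logic can be sketched in Python as follows. This is only an illustration under stated assumptions: the blacklist entries are hypothetical, the server is compared by exact name, and the path by prefix. The real text format and matching rules are those documented in mkblacklist(1) and are not reproduced here.

```python
# Hypothetical blacklist content: (server, initial path part) -> categories.
BLACKLIST = {
    ("news.example.com", ""): {"news"},
    ("www.example.org", "/chat/"): {"chat"},
}

# Categories denied by request-acl BLACKLIST in the example.
DENIED = {"chat", "news"}

def categories_for(host, path):
    """Collect the categories of all blacklist entries covering host and path."""
    found = set()
    for (server, prefix), categories in BLACKLIST.items():
        if host == server and path.startswith(prefix):
            found |= categories
    return found

def select_acl(host, path):
    """Return the name of the request-acl that would handle the request."""
    if categories_for(host, path) & DENIED:
        return "BLACKLIST"   # the request is denied
    return "WEB-FILTER"      # the request continues to the next ACL

print(select_acl("news.example.com", "/"))  # BLACKLIST
print(select_acl("www.example.com", "/"))   # WEB-FILTER
```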

Note

If a proxy runs in chroot, the path blacklist-db will be interpreted in the context of the chroot directory.

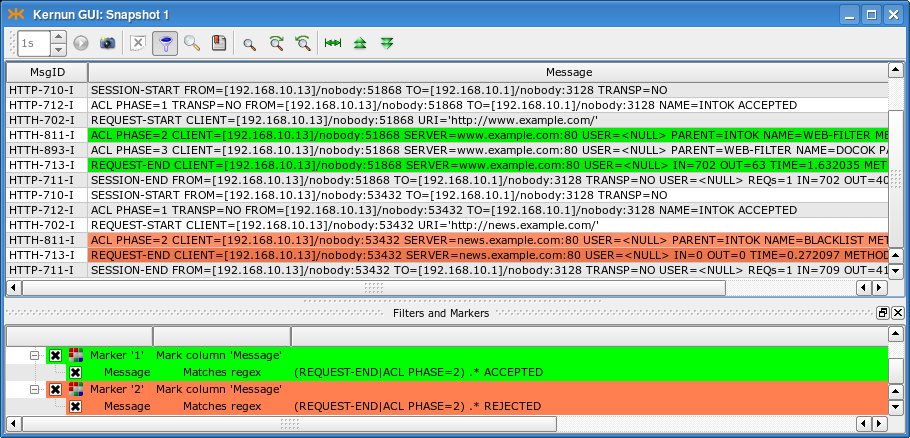

A log of an HTTP proxy configured according to Figure 5.73, “A blacklist in the HTTP proxy” is displayed in Figure 5.74, “A log of blacklist usage in the HTTP proxy”. The log messages relate to two requests. The first request, to server www.example.com, does not match the categories selected in request-acl BLACKLIST, and the request is accepted by request-acl WEB-FILTER. The second request, to news.example.com, matches a category from request-acl BLACKLIST, which rejects the request. Note the ACL names reported in ACL PHASE=2 messages and the log markers that colorize accepting and rejecting messages.

Continuous updating of a blacklist so that it covers as many prospective unwanted Web servers as possible is a challenging task, greatly exceeding the capabilities of a typical company network administrator. Therefore, there are commercially available services that provide regularly updated databases of Web servers classified into many categories according to content. Kernun UTM contains one such service—the Kernun Clear Web DataBase.

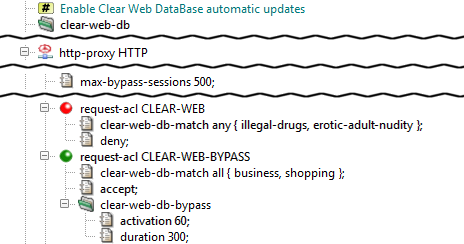

Configuration of the Kernun Clear Web DataBase consists of two parts. One is used to detect URL categories and select ACLs according to the categories, the other deals with updating of the database of URLs.

The Clear Web DataBase takes a request URI and assigns a set of categories to it. The categories can be matched by item request-acl.clear-web-db-match in the process of request-acl selection during HTTP request processing. Two examples of request-acl sections with a clear-web-db-match condition are shown in Figure 5.75, “Kernun Clear Web DataBase in the HTTP proxy”. There are three possible modes of category matching, selectable in each condition item:

- any: at least one category of the request URI matches the set of categories in the condition;
- all: all categories in the condition are contained in the set of categories to which the request URI belongs;
- exact: the request URI belongs to all the categories from the condition and does not belong to any additional category.
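The three modes correspond to simple set operations. The following Python sketch shows them under the assumption that both the categories of the request URI and the categories in the condition are plain sets of names; the function name and the category names in the examples are illustrative only.

```python
def categories_match(mode, uri_categories, condition):
    """Evaluate one category-matching mode on two sets of category names."""
    uri_categories = set(uri_categories)
    condition = set(condition)
    if mode == "any":
        # At least one category is shared.
        return bool(uri_categories & condition)
    if mode == "all":
        # Every category of the condition is assigned to the URI.
        return condition <= uri_categories
    if mode == "exact":
        # The URI has exactly the categories of the condition, no more.
        return condition == uri_categories
    raise ValueError("unknown mode: " + mode)

uri = {"news", "sports"}
print(categories_match("any", uri, {"news", "chat"}))  # True
print(categories_match("all", uri, {"news", "chat"}))  # False
print(categories_match("all", uri, {"news"}))          # True
print(categories_match("exact", uri, {"news"}))        # False
```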

If the selected request-acl contains a clear-web-db-match condition (CLEAR-WEB in the sample configuration) and the deny item, the proxy will return an error HTML page stating that the request has been denied by the Clear Web DataBase and listing the matching categories.

As an alternative to denying a request URI matching given categories outright, the proxy provides the bypass feature, a sort of “soft deny”. It is activated by item request-acl.clear-web-db-bypass (used in CLEAR-WEB-BYPASS in the sample configuration). When a user attempts to access a Web server restricted by the bypass, the proxy returns an error page containing a link through which the user can obtain limited-time access to the requested server and to all Web servers that belong to the same categories. After the bypass expiration time, which can be changed using item duration, the error page will be displayed again. The user can then reactivate the bypass. Each bypass activation is logged.

Bypass sessions activated by users are tracked in an internal table managed by the proxy. The maximum number of simultaneously active bypass sessions is controlled by http-proxy.max-bypass-sessions. If this number is set to 0, the number of bypass sessions is unlimited and the sessions are tracked by cookies. In this case, an activated bypass is valid for the target server and all servers in the same domain, but not for other servers, even if they belong to the same categories.
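The table-based variant can be pictured with the following Python sketch. It is a simplified model, not the proxy's data structure. It assumes that a session is identified by a client identifier together with a set of categories, that a full table refuses new sessions, and that expired sessions are purged lazily. The cookie-based mode selected by the value 0 is not modeled.

```python
import time

class BypassTable:
    """Toy model of a bounded table of time-limited bypass sessions."""

    def __init__(self, max_sessions, duration, clock=time.monotonic):
        self.max_sessions = max_sessions  # assumed to be greater than 0 here
        self.duration = duration          # bypass lifetime in seconds
        self.clock = clock                # injectable for testing
        self.sessions = {}                # (client, categories) -> expiry time

    def _purge(self):
        # Drop sessions whose expiration time has passed.
        now = self.clock()
        self.sessions = {k: t for k, t in self.sessions.items() if t > now}

    def activate(self, client, categories):
        """Start or renew a bypass; return False if the table is full."""
        self._purge()
        key = (client, frozenset(categories))
        if key not in self.sessions and len(self.sessions) >= self.max_sessions:
            return False
        self.sessions[key] = self.clock() + self.duration
        return True

    def is_active(self, client, categories):
        """Tell whether the client currently has a bypass for the categories."""
        self._purge()
        return (client, frozenset(categories)) in self.sessions
```

Passing the clock as a parameter keeps the sketch testable without waiting for a real timeout.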

Clients often use the HTTP proxy also for access to intranet WWW servers. These internal servers can be located in the internal network, using private IP addresses from ranges defined by RFC 1918 (networks 10.0.0.0/8, 172.16.0.0/12, and 192.168.0.0/16), or they may be accessible from the Internet, but restricted to internal users.

Such internal servers are not contained in the Clear Web DataBase and would be categorized as unknown. In order to distinguish internal servers from public web servers with unknown categories, host names or IP addresses of the internal servers can be listed in the configuration by item clear-web-db.internal-servers. Private IP addresses from RFC 1918 are included by specifying internal-servers private-ip, so they need not be written explicitly.

If a server from an HTTP request matches some element of the list of internal servers, it will be assigned category internal-servers. This category is not used for any public web server included in the standard Clear Web DataBase.
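The check for internal servers can be sketched in Python as below. The function name, its parameters, and the example host names are illustrative assumptions. The RFC 1918 networks are listed explicitly instead of using the broader is_private attribute of the ipaddress module, which also covers other reserved ranges.

```python
import ipaddress

# The three private ranges defined by RFC 1918.
RFC1918_NETWORKS = [
    ipaddress.ip_network(net)
    for net in ("10.0.0.0/8", "172.16.0.0/12", "192.168.0.0/16")
]

def is_internal(host, internal_servers=(), private_ip=True):
    """Tell whether a host would fall into the internal-servers category."""
    if host in internal_servers:
        return True              # explicitly listed name or address
    if not private_ip:
        return False
    try:
        address = ipaddress.ip_address(host)
    except ValueError:
        return False             # a host name, not an IP address literal
    return any(address in net for net in RFC1918_NETWORKS)

print(is_internal("192.168.1.10"))  # True
print(is_internal("172.32.0.1"))    # False
print(is_internal("intranet.example.com",
                  internal_servers={"intranet.example.com"}))  # True
```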

The Clear Web DataBase file location is controlled by clear-web-db.db, which defaults to /data/var/clear-web-db if not set. The database file is copied (or hardlinked, if possible) automatically to any chroot directories by the database update script and when the configuration is applied.

Although it is possible to update the Clear Web DataBase data manually by copying an updated clear-web.db file to the database directory, it is recommended to use the provided update script clear-web-db-update.sh for this purpose. It downloads the most appropriate full database and/or applies any incremental updates to get the most recent version of the database, minimizing the amount of downloaded data. To use this feature, you need valid download credentials. These are configured by clear-web-db.credentials. If not set, the serial number from the license file is used as both the username and the password.

The Clear Web DataBase data update script can be executed either manually by running clear-web-db-update.sh from the root console (Kernun console in the GUI), or automatically by cron at periodic intervals. Periodic updates are enabled by placing the (usually empty) clear-web-db section in the system configuration.

If no record is found for a requested URL in the Clear Web DataBase, the “unknown” result is reported and matched in ACLs. A valid list of categories will be returned for repeated requests to the same URL only after the server becomes a part of the Clear Web DataBase downloaded in some future update. Optionally, there is a faster way to obtain categories for servers not yet known: the cwcatd daemon, which performs automatic local categorization of web pages, can be enabled.

If categories for a requested URL are not found in the Clear Web DataBase, the URL is appended to a queue that is processed by daemon cwcatd. The daemon reads uncategorized URLs from the queue. For each URL, it tries to download the referenced web page. The downloaded page is passed to an automatic categorizer, which tries to assign categories according to heuristics applied to the page content. If it succeeds, the result is stored in a local database. Future requests to a locally categorized server will get categories assigned according to the local database. If categories of the server appear in the downloaded Clear Web database in its periodic update, the result of the automatic local categorization will not be used any more.
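The order of lookups described above can be summarized in a short Python sketch. It is a model under assumptions, not the actual implementation: both databases are represented as dictionaries keyed by URL, the queue is an in-memory deque, a URL is enqueued only if neither database knows it, and categorize is a hypothetical stand-in for the page download and the heuristic categorizer.

```python
from collections import deque

def lookup_categories(url, clear_web_db, local_db, queue):
    """Return categories for a URL, preferring the Clear Web DataBase."""
    if url in clear_web_db:
        return clear_web_db[url]   # the downloaded database always wins
    if url in local_db:
        return local_db[url]       # result of automatic local categorization
    if url not in queue:
        queue.append(url)          # leave it for the categorization daemon
    return {"unknown"}

def process_queue(queue, local_db, categorize):
    """Model of the daemon loop: categorize queued URLs, store successes."""
    while queue:
        url = queue.popleft()
        categories = categorize(url)   # hypothetical categorizer callback
        if categories:
            local_db[url] = categories
```

Because the Clear Web DataBase is consulted first, a locally stored result stops being used as soon as a later database update contains the server, which matches the behavior described in the text.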

Categories discovered automatically by the cwcatd daemon are not as correct and reliable as the contents of the Clear Web DataBase maintained by experienced human operators. But on the other hand, results of the automatic categorization are available almost immediately after the access to a web page with unknown categories.

Automatic categorization of web servers is enabled by adding subsection local-db to the configuration section clear-web-db. It instructs the http-proxy and the icap-server to enqueue URLs with unknown categories for processing by cwcatd and to use the results of automatic categorization. The cwcatd daemon is enabled by adding section cwcatd.

The Clear Web DataBase and the local database use the following set of categories:

- Advertisement: Advertising agencies, servers providing advertising banners
- Alcohol / Tobacco: Alcohol, tobacco, breweries
- Arts: Galleries, theaters, exhibitions
- Banking: Banks, Internet banking
- Brokers: Stock exchange information, stocks purchase and sale
- Building / Home: Construction, architecture, e-shops with construction materials and equipment
- Business: Corporate websites, offering actual services and products, Web pages referring to companies (parked domains)
- Cars / Vehicles: Web pages of motorists, motorcyclists, aviators, sailors, producers, vendors and enthusiasts of transportation vehicles
- Chats / Blogs / Forums: Chats, personal blogs, discussion forums
- Communication: Information and communication technology, services of Internet providers, telephone operators, SMS gateways, phonebooks
- Crime: Committing crime, crime protection
- Education: Education, science, research
- Entertainment: Entertainment and sports centers
- Environment: Weather forecasts, live webcams, environment protection, ecology
- Erotic / Adult / Nudity: Erotic acts, half-naked up to naked bodies, erotic tales
- Extreme / Hate / Violence: Attacks against individuals or groups of people, deviations (sadism, masochism, etc.), violence on humans or animals
- Fashion / Beauty: Clothing, body care, make-up
- Food / Restaurants: Restaurants, recipes
- Foundations / Charity / Social Services: Help to handicapped, addicted, underprivileged people or people with special needs, families and children
- Gambling: Gambling for money
- Games: Online and offline games
- Government: Government organizations, agencies, ministries, communes
- Hacking / Phishing / Fraud: Web pages of cracking groups, malicious software, exploitation guides. Does not include anti-hacking and anti-phishing protection
- Health / Medicine: Electronic pharmacies, hospitals, relaxation, massage, spa
- Hobbies: Thematic hobby/interests pages
- Humor / Cool: Humor, jokes, pranks
- Illegal Drugs: Drugs, narcotics
- Instant Messaging: Real-time message transfer
- Insurance: Insurance companies
- Internal Servers: Internal web server, includes private (RFC 1918) IP addresses and servers declared as internal in the configuration
- IT / Hardware / Software: Hardware and software retail, service, support
- IT Services / Internet: IT and Internet products, services, domain names
- Job / Career: Human resource agencies, job offers
- Kids / Toys / Family: Advice, instructions, websites for kids and family
- Military / Guns: Historic information, army-shops
- Mobile Phones / Operators: Mobile phones and accessories, telephone operators
- Money / Financial: General financial consultancy, financial servers
- Music / Radio / Cinema / TV: Web pages of music bands, pages about music and movies, TV guides, cinema programme
- News / Magazines: Live news, tabloids, journals. Does not include Web pages aggregating news from other sources
- Peer-to-peer: Peer-to-peer networks for data transfer (software, music, movies)
- Personal / Dating / Live Styles: Personal Web pages, pages of musicians and bands, gossips
- Politics / Law: Web pages of political parties, pages about politics and law
- Pornography: Videos for download, photos, e-shops
- Portals / Search Engines: Internet search engines, covering either a large number of areas or specific fields. Does not include Web pages searching their own site only
- Proxies: Servers that provide access to other Web pages to their clients, e.g. in order to hide the client's identity or to circumvent network access restrictions
- Real Estate: Real estate servers, companies
- Regional: Web pages of municipalities and regions
- Religious / Spirituality: Churches, religious themes, esoteric pages
- Sale / Auctions: Auctions, bazaars, sale and purchase
- Sects: Sects
- Sex Education: Professional information about sex
- Shopping: Stores, advertising sites, e-shops with a shopping cart, which must be operated at the top-level domain
- Social Networks: Social structures made up of people connected by friendship, family, common interests and other relations
- Sports: All sports and disciplines, including extreme sports
- Streaming / Broadcasting: Internet TV and radio
- Swimwear / Intimate: Lingerie, underwear, swimsuits, e-shops, producers and sellers
- Translation Services: Online translation, translational services
- Travelling / Vacation: Timetables, travel agencies, public transportation companies, castles, chateaus
- Uploading / Downloading: Online data storage, photo albums, file-sharing servers
- Warez / Piracy: Illegal distribution of copyright-protected content, links to software, serial numbers, movies, password exchange forums
- Web Based Mail: Free Web-based mail providers
- Web Hosting: Web sites providing space on their servers to third parties

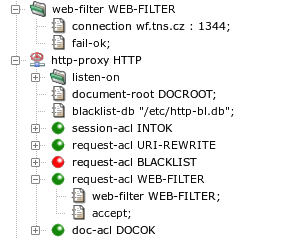

In addition to using the internal Kernun Clear Web DataBase, it is possible to cooperate with an external Web filtering service. Only one such service is supported: the Proventia Web Filter. It is considered a legacy feature, and the Kernun Clear Web DataBase is recommended as a replacement.

Proventia Web Filter runs separately from Kernun UTM on a machine with a Microsoft Windows, Linux, or FreeBSD (with Linux emulation) operating system. It is configured by its own graphical management console, available for Microsoft Windows. In order to work with Kernun UTM, Proventia Web Filter must be configured as described in section Web Filter of manual page http-proxy(8). In particular, ICAP Integration must be enabled. Moreover, if the Web filter rules take user names into account, User Profile Support must be enabled.

The relevant part of the HTTP proxy configuration is depicted in Figure 5.76, “An HTTP proxy configured for use of an external Web filter”. The parameters of the connection to the Proventia Web Filter server are specified by section web-filter. Note the port number 1344, which Proventia Web Filter uses by default for the ICAP protocol. The sample configuration also contains a fail-ok item, which means that all requests are to be accepted if Kernun UTM cannot communicate with the Web filter. Without this item, all requests would be rejected until the connection to the Web filter is restored.
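The effect of fail-ok can be expressed as a small decision function. The Python sketch below is illustrative only: ask_filter is a hypothetical callable standing in for the ICAP exchange with the Web filter, and a ConnectionError is assumed to represent any failure to communicate with it.

```python
def web_filter_decision(ask_filter, fail_ok):
    """Decide about a request, given the filter verdict or its unavailability."""
    try:
        allowed = ask_filter()   # hypothetical query to the Web filter
    except ConnectionError:
        # The filter cannot be reached: fail-ok decides the outcome.
        return "accept" if fail_ok else "reject"
    return "accept" if allowed else "reject"

def filter_down():
    raise ConnectionError("Web filter unreachable")

print(web_filter_decision(filter_down, fail_ok=True))     # accept
print(web_filter_decision(filter_down, fail_ok=False))    # reject
print(web_filter_decision(lambda: False, fail_ok=True))   # reject
```

Note that fail-ok only affects the case in which no verdict is available; a request explicitly rejected by the filter stays rejected.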

Requests that are accepted by a request-acl with a web-filter item will be forwarded to the Web filter server. If the Web filter accepts the request, the proxy will continue with the normal request processing[40].

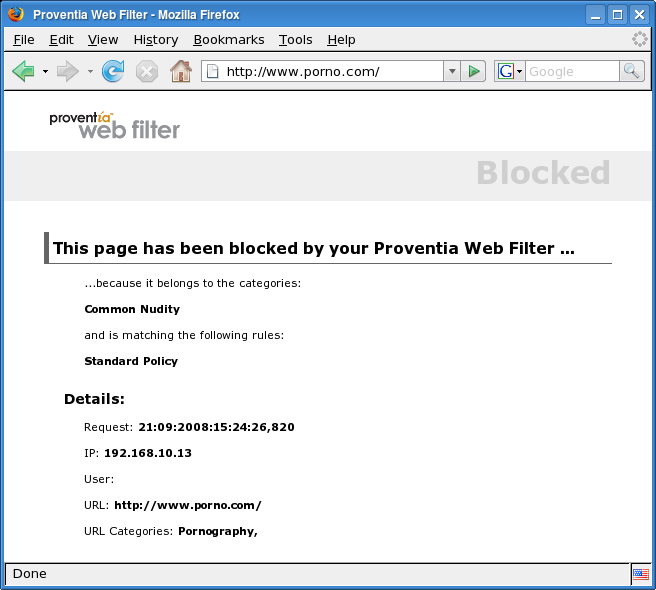

If the Web filter rejects the request, the proxy will terminate the processing of the request and return an error HTML page to the client. An example of a Web site blocked by the Web filter is shown in Figure 5.77, “A Web server blocked by the Web filter”.

[40] The request will be sent to the destination server and its response will be returned to the client.